The gap between what we know and what we do in education lies at the heart of a global learning crisis. This Education.org white paper argues that it is not the lack of new research that is the greatest obstacle to progress, but the failure to use what we already know.

It draws upon a critical comparison of the education and health sectors’ use of evidence for decision-making, building on interviews with leaders of education knowledge organisations. It identifies what can be done to accelerate improvements in education by making more effective use of the evidence that should be driving education policy and practice.

In the past forty years, the health sector has turbocharged the quantity and quality of research available, but its success is a result of much more than volume alone. Sector-wide capacity exists to create comprehensive and systematic syntheses of evidence developed through trust-building processes that strive for independence and transparency. These syntheses are the foundation for actionable guidance that is user-centred, reflecting actual challenges of policymakers and practitioners. Results are communicated by dedicated translation specialists so that evidence is shared beyond a narrow group of experts. Over time, the process has become more inclusive with greater awareness of gender and ethnic diversity. Underpinning this approach is effective coordination and alignment of incentives and culture across the health sector communities of research, policy and practice. The result is that doctors don’t need to “try and guess what the evidence says about particular forms of care”.1

In the education sector, despite huge progress and many important initiatives, critical parts of this knowledge infrastructure are still either missing or nascent. The communities of research, policy and practice are often independent islands of activity, making worthy but uncoordinated attempts to bridge the gaps. According to our analysis, the health sector undertakes 26 times more synthesis work than education: for every synthesis produced in education, health produces about 26. Beyond this volume gap, education syntheses are, by comparison with health, more sporadic, less complete, and less likely to be connected to the challenges of policy and practice. As a result, existing education research is rarely translated into actionable guidance and used by policymakers and practitioners. Teachers and education policymakers, unlike doctors, are often left to guess at what the evidence says.

This white paper calls for an “Education Knowledge Bridge” to build the capacity for comprehensive and up-to-date syntheses, leading to clear policy guidance that can be implemented at scale.

To be effective, this new Education Knowledge Bridge must:

- Be user-centred: Reflecting actual challenges of policy and practice.

- Reinforce core education systems: Instead of encouraging more parallel pilots of “silver bullets”.

- Be trusted: Ensuring independence and transparency at every stage.

- Leverage networks: To support implementation.

- Prioritise equity: To ensure that evidence reaches those in greatest need.

Through the good work of many organisations, critical building blocks for this knowledge bridge already exist.

This white paper is a call to work together to transform these building blocks into a fully functioning Education Knowledge Bridge that tackles the global learning crisis by making more use of the evidence that we already have.

Key facts

▶ US$4.7 trillion: Annual global education expenditure (source: GEM Report, 2019)

▶ US$8.3 trillion: Annual global health expenditure (source: WHO, 2020)

▶ 1.75x: Health-to-education expenditure ratio

▶ 860 syntheses produced annually in the education sector (source: Education.org analysis)

▶ 22,000 syntheses produced annually in the health sector (source: Education.org analysis)

▶ 26x: Health-to-education synthesis ratio

See Appendix E for a methodological note on this analysis.
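As a simple check on the arithmetic, the 26x synthesis ratio follows from dividing the two annual synthesis counts listed in the key facts (both taken from Education.org's own analysis) and rounding to the nearest whole number:

```latex
\[
\frac{\text{health syntheses per year}}{\text{education syntheses per year}}
= \frac{22{,}000}{860} \approx 25.6 \approx 26
\]
```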

1. A school report card for “Our World”

At the end of term, in a ritual passed down over centuries, teachers have written school report cards for each student. Their verdicts have ranged from the plainly wrong (“He will never amount to anything”, of Albert Einstein), to the underestimating (“He will either go to prison or become a millionaire”, of the billionaire Sir Richard Branson), to the backhanded (“The improvement in handwriting has revealed an inability to spell”, of an unknown victim of the teacher’s pen)!

So, today, what would a school report card for the education systems of “Our World” look like?

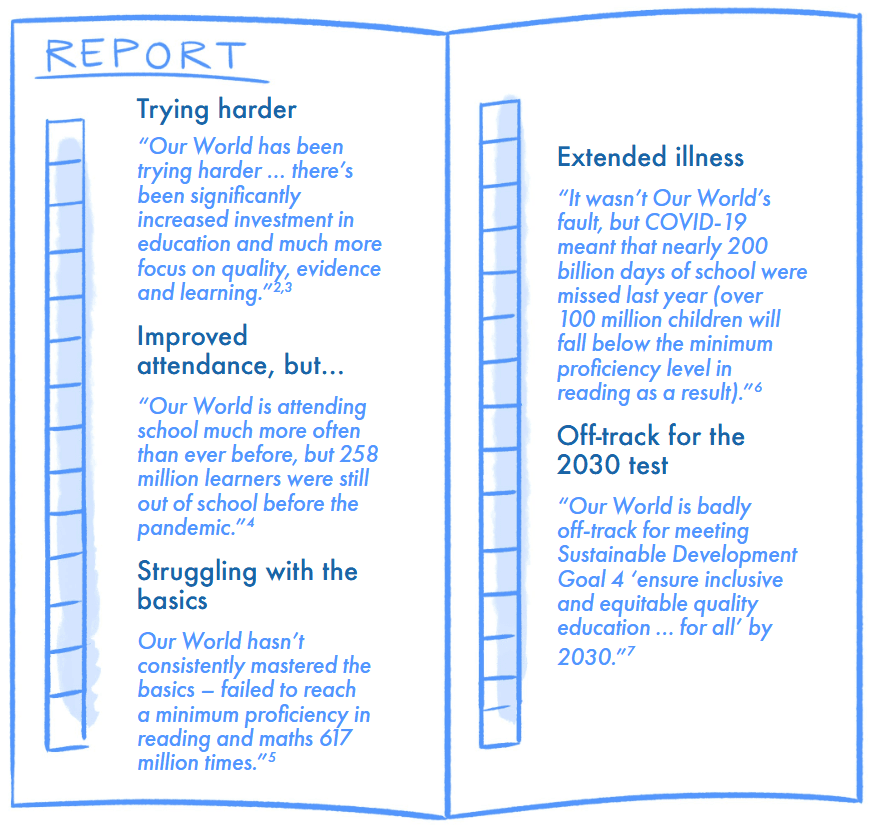

Image 1. School report

While we’ve made huge progress in educating Our World, we can’t possibly be satisfied with this report card yet. This situation has been described as a learning crisis. The life-long loss of human potential for millions is unimaginable. Fortunately, there’s plenty of good advice on ways to help Our World do better at school:

Use evidence to guide policy.

“The international sector invests heavily in research, yet we must do a better job of understanding and using the data”, says Julie Cram, Deputy Assistant Administrator for Economic Growth, Education and Environment, USAID.

“Unfortunately, too little of this knowledge makes it into education policy and subsequent implementation. Some of the most proven approaches remain overlooked and underfunded, while money continues to be spent on other, much less effective, practices and interventions”, says the International Commission on Financing Global Education Opportunity (the “Education Commission”).3

Move from evaluation for accountability to evaluation for impact.

“Development actors spend upwards of US$2.5 billion annually performing program monitoring and evaluation, yet staff in donor and government agencies report little to no utilization of this data for decision-making”, says Development Gateway.8

Focus more on equity and meeting student needs.

“School systems are not adapting to the culture, territory, identity and characteristics of students”, according to Javier Gonzalez, Director, SUMMA.

Improved learning and more effective use of resources require drastic improvements in education system performance.

“A major leadership push is needed”, according to an essay by Nick Burnett, a senior fellow at Results for Development.9

Put bluntly, we need to bridge the gap between what we know, or could know from the research that is available, and what happens in schools as a result.

While the gap is widest for vulnerable and marginalised children (especially girls, minorities, those in poverty, and children with learning differences and special needs), this is not just a challenge for a handful of countries. Across the world, children are sitting in schools and other non-formal learning environments, disengaged or struggling. Our education systems are usually designed for the “average” student, so they often fail to meet the needs of the majority who need to go faster, slower, further or somewhere a little different.

These 617 million children (that is 60% of all children and adolescents globally, as estimated by ) can be found in every part of the world.5 At times of crisis, as with a pandemic, the ability of systems to use evidence to adapt resiliently becomes even more important.

This gap is not new, and there are many calls for change. Leaders at global and country level are clear about the role that evidence could play in accelerating improvement:

Global leaders are calling for investment in public goods and sharing.

“By developing the infrastructure needed to share knowledge across borders, best practices and effective innovations can spread to new geographies, and local, national, and regional actors with similar experiences can collaborate in a way that propels everyone forward”, according to the International Commission on Financing Global Education Opportunity.10

Decision-makers want relevant guidance, not conflicting pieces of the puzzle.

“Research studies on a single education intervention contribute to our knowledge, but single studies alone are not enough to learn what works, where and for whom”, according to the leader of a global knowledge organisation.

Country leaders are calling for a more inclusive and contextualised understanding of evidence.

“It is quite common for people to make policies based on gut feeling or history or their perception … We want to use evidence to inform our policy. Evidence can come from other countries and from local experience. We actively test innovations and their potential to scale. Also, citizen engagement in building and testing innovations is a principle we care about”, according to Dr David Moinina Sengeh, Minister of Basic and Senior Secondary Education in Sierra Leone.11

Country and local leaders are asking for evidence to be generated for their use.

“We have many groups producing and interested in evidence, but we lack coordination of their work, and often it is not reflecting national priorities. What parties are generating should be informing our critical gaps”, according to a senior policy official at a government ministry.

Leaders are calling for help in curating evidence.

“We need to move beyond the situation today where too much evidence is not visible or understandable, not relevant, not actionable, siloed, driven by donor agendas or interventions, often duplicative”, says Suzanne Grant Lewis, Director of .

These calls to action have not gone unheard. Growing sums of money are flowing into bilateral and pooled funds with innovation, knowledge and learning as rallying calls. But, as Larry Summers, former Chief Economist of the World Bank, observes in his foreword to Investing in Knowledge Sharing to Advance 4: “too often, increased investment serves only to support methods and existing institutions that have been ineffective”10. So, while more research is necessary, welcome and overdue, “the use of research evidence has not kept up… leading to wasted opportunities and even harm for the education system and its users”, according to Professor Stephen Gorard of Durham University.12

This is a moment to reflect. With COVID-19 throwing 4 even further off track, simply repeating more vigorously the same old recipe is not an effective solution to an entrenched challenge. New approaches are needed to address the learning crisis without depending on new funding alone. By more comprehensively and systematically marshalling the world’s knowledge and evidence, scarce resources can be put to more effective use to advance equity, access, and outcomes for students everywhere.

This white paper asks what we must do to bridge the knowing-doing gap so that we can improve Our World’s school report card. It is a direct response to multiple calls from system leaders across governments and civil society, at global and national levels, to use evidence more effectively to address the learning crisis. It is written in the belief that this crisis is profound and touches all corners of the globe, and that all children should have access to a good quality education.

Methodology

This white paper draws upon:

▶ a critical comparison of the education and health sectors conducted by Education.org;

▶ 80 interviews and informal discussions with leading global knowledge actors (organisations that focus on the production, analysis, synthesis and/or dissemination of knowledge), supplemented by a small number of major regional players;

▶ case studies to understand the role and contribution of organisations currently working on issues relating to this white paper;

▶ a review of more than 45 organisations, 80 major reports and 8 collaborative knowledge initiatives in education.

2. Knowledge to policy and practice: comparing health and education

In education, we often look towards the health sector for inspiration.

Education.org undertook this exploration to discover important differences between the ways that the health and education sectors work with evidence. Our goal was not to find a “copy and paste” solution, nor to underplay the many challenges in the health sector, but to stimulate critical thinking about how to accelerate progress in education.

Other sectors have also made dramatic changes in their use of data and evidence, for example, in aviation safety and climate change.13 However, health stands out as a comparative reference for education because both fields are people focused, represent major parts of government budgets, and have outcomes affected by stakeholders and context, such as the role of parents.

There are substantial differences, of course. Education does not have diseases, pills, or cures. A quick blood test can reveal COVID-19 antibodies, but it cannot tell us if a child understands calculus. Yet, there are also many similarities. Patients and students are people. Two patients/students can respond very differently to the same intervention, and doctors/teachers often need the skills of a social worker to understand what is most likely to work.

Health is far from a perfect parallel, and many health experts are quick to point out significant deficiencies, such as its focus on treating disease rather than on prevention and well-being. Nevertheless, health offers inspiration to inform our thinking.14

So, how does the health sector work to bridge the knowing-doing gap?

Our analysis reveals five characteristics of the health sector knowledge bridge that we judge to be absent or nascent in education. Yet, positively, we also see many of the building blocks needed to create a comparable Education Knowledge Bridge.

1. Research generation

While this white paper focuses on the use of research rather than its generation, it is inevitable that the volume and focus of research will have a significant impact on the likelihood of its future utilisation. In comparing health and education, three important differences stand out:

a. The health sector invests heavily in quality research.

Health research is often commissioned by public bodies. For example, the National Institutes of Health (NIH) in the US invests US$32 billion annually in medical research.15 Research is also commissioned by the pharmaceutical industry, which has significant commercial incentives to invest; its research and development activity was estimated at US$165 billion in 2018.16 Health research is also funded by large health-focused foundations such as the UK’s Wellcome Trust, which has an endowment of £26 billion (US$36 billion). The consequence is a vast body of evidence that is estimated to be growing at the rate of “75 trials and 11 systematic reviews per day”.17 Comparable statistics for education research spending or outputs are difficult to identify, and there are certainly fewer incentives to encourage private sector investment. Education spending represents a significant part of national government spending around the world (5% of GDP is not untypical), and public funds account for the majority of education spending in many countries.2,18 However, this commitment rarely prioritises research about what works and under what conditions, and therefore about how this money is best used. The Education Commission report addressing education financing stated:

“to keep investment focused on the reforms and practices that work best requires building systems that continuously seek out and act upon the best new information on what delivers results, including by increasing the share of funding that goes towards research, development, and evaluation … Today, most countries spend very little of their education budgets on research and development, and it accounts for just 3 percent of international aid in education. Education lags behind other sectors in the funding and institutions to support research and data.”3

Encouragingly, building blocks for a future Education Knowledge Bridge are evident, if still on a much smaller scale than in health. With a budget of US$75 million, the Knowledge and Innovation Exchange (KIX) has been established to meet global public good gaps in education. KIX brings together 68 low- and middle-income countries that are partners of the to identify common policy challenges and facilitate knowledge sharing and evidence building.19 Meanwhile, Dubai Cares’ ‘E-Cubed’ US$10m partnership with the aims to strengthen the evidence base for education in emergencies by supporting contextually relevant and usable research and disseminating global public goods, fostering collaboration, and building community engagement.20

b. Health research is likely to be applied and user-centred.

When budgets are limited, it becomes even more important to ensure that the research that is commissioned is relevant. Health research is more likely to be applied, user-centred, and designed so that outputs can be directly linked to existing clinical pathways and protocols. New health research is likely to start from an understanding of a specific challenge or obstacle in the treatment of a condition, to focus on filling gaps in current knowledge, and to envisage application in an existing treatment pathway.

In contrast, education research is less likely to be focused on current problems of practice and policy and more likely to be “decided by researchers identifying questions of interest to them”21,22 or that they deem relevant. The education system rewards academics for publication in journals, so publication can come to be seen as the culmination of the journey. Where academics go further to engage practitioners in research, this usually happens at the end of the research process, with the dissemination of findings, not at the start.

Again, there are encouraging initiatives in place. For example, , as championed by the , are a “systematic evidence synthesis product which display the available evidence relevant to a specific research question”.23 By identifying gaps where little or no evidence from impact evaluations and systematic reviews is available, it is possible to support a more strategic approach to building the evidence base for a sector.

have been combined to create mega-maps such as the recent Mega-map of systematic reviews and evidence and gap maps on the interventions to improve child well-being in low- and middle-income countries published by the Campbell Collaboration. This mega-map covered 333 systematic reviews and 23 .24 Such initiatives offer funders and academics greater insight into research needs and gaps, potentially also reducing the risk of wasting scarce resources through duplication and repetition of what has already been researched.

c. Health research is increasingly likely to reflect the needs of minority groups.

Over time, the health research community has recognised its inherent biases towards patients of a particular gender, age or condition – realising that equity can only be achieved when data is collected from, and reflects the differing needs of, all relevant groups and contexts.25

The question of “what works?” has steadily been replaced with “what works for whom, when, where and why?” with greater effort to increase diversity and meaningful participation in clinical trials.26,27

The vast majority of education research is conducted in contexts that are unrepresentative of most of the global population, limiting the likelihood that its findings will support a meaningful equity agenda.28–30 Just as health has learned to investigate why some patients do not respond to a course of treatment, education needs to disaggregate data for those with learning differences. The needs of marginalised or forgotten groups will remain poorly understood as long as research excludes them in the first place, or as long as they are lost in a mountain of data and described by an average.

There are encouraging innovations in the education sector. For example, the is a South-South partnership of organisations working across three continents. Member organisations conduct citizen-led assessments aimed at improving learning outcomes by, among other activities, generating data through oral one-on-one assessments conducted in households.

The Regional Education Learning Initiative () is another example, composed of 70 members in East Africa, promoting cross-organisational learning and exchange to improve education access and quality.

2. Synthesis

“Evidence synthesis refers to the process of bringing together information from a range of sources and disciplines to inform debates and decisions on specific issues. Decision-making and public debate are best served if policymakers have access to the best current evidence on an issue.”31

Without systematic and routine synthesis, practitioners and policymakers are faced with an overwhelming number of journals, papers, and reports, which in practical terms makes it impossible to be guided by evidence in any coordinated and balanced way. In comparing health and education synthesis, three important differences stand out:

a. The health sector has a comprehensive, sector-wide synthesis process.

In health, a sector-wide synthesis process means that doctors and policymakers rarely need to read, rate, and evaluate individual studies. Instead, they can draw on robust and comprehensive syntheses of evidence around specific, practice-focused themes, or have confidence that established treatment pathways will reflect best-in-class evidence.

These syntheses come from specialist organisations which conduct synthesis at scale. For example, Cochrane (see callout) provides 8,500 systematic reviews in its publicly available and searchable library at .

The Cochrane Library is the largest source of systematic reviews in health. Epistemonikos acts as a library of libraries, regularly drawing evidence from ten different organisations, including Cochrane, so as to “identify all of the systematic reviews relevant for health decision-making” in one place.32

The education sector has no systematic sector-wide process for synthesis at scale, nor a central library of the results. According to Stephen Fraser, Deputy Chief Executive of the Education Endowment Foundation (EEF), “we lack a central convenor of systematically developed syntheses”. Most educational research remains isolated in individual journal articles, which are often one-off intervention studies hidden behind paywalls. As Larry Cooley, Non-resident Senior Fellow, Center for Universal Education at Brookings, explained: “In education, ensuring that the best available evidence is consolidated and acted upon is nobody’s job.”

While there is no systematic sector-wide process, smaller-scale, high-quality synthesis work is underway in the education sector. For example, the online Teaching and Learning Toolkit has synthesised, and continues to update, evidence about 35 school-based interventions.33 This highly accessible and intuitive resource has encouraged 64% of UK school leaders to use evidence to inform decisions about allocating special government funding for disadvantaged students, up from 36% in 2012.34 The EEF has been working with partners in other countries to create comparable toolkits internationally. For example, SUMMA has translated the toolkit into Spanish and Portuguese and synthesised the evidence base for teachers in Latin America and the Caribbean.35

There are other important building blocks in the education sector:

▶ The Campbell Collaboration, a sister organisation of Cochrane, produces syntheses and policy briefs across a wide range of social science topics, including education. It has produced an average of three internationally focused K-12 education syntheses each year over the past five years and has been working in partnership with 3ie on Evidence Gap Maps.36

▶ The EPPI-Centre at the UCL Institute of Education in London has several decades of synthesis experience across many areas of social policy including education. However, in recent years its output has focused on broader social issues and it has not published any specific K-12 education syntheses.37

▶ The Institute of Education Sciences and the What Works Clearinghouse conduct “what works” reviews of specific interventions (packaged programmes) in a US cultural context.38

▶ The Harvard Center on the Developing Child and the annual GEM Report from both focus on synthesising knowledge around very specific themes (e.g., toxic stress and executive functioning, in the case of Harvard; inclusion, in the case of the 2021 GEM Report). They have excellent outreach capabilities to engage wider networks in disseminating their materials.39,40

These examples are important building blocks, but they are not sufficient to bridge the knowing-doing gap. Even when their results are combined, they confirm the small scale of synthesis work in the education sector relative to the need. Furthermore, there is no single place where the work of these different organisations comes together in a form that policymakers and practitioners can easily find and engage with.

b. Health sector syntheses are timely and regularly updated as new evidence becomes available.

In health, a systematic review is a living document. Old reviews are routinely updated as new evidence becomes available. Cochrane produced 560 new or updated reviews in 2019, stating:

“Cochrane Reviews are updated to reflect the findings of new evidence when it becomes available because the results of new studies can change the conclusions of a review. Cochrane Reviews are therefore valuable sources of information for those receiving and providing care, as well as for decision-makers and researchers.”41

Handling the volume of new research in health is a significant challenge. Cochrane uses a network of 21,000 “citizen scientists” to identify research that may be relevant for future synthesis, which it catalogues in a central register.42 Artificial intelligence techniques could further improve the reliability of tracking new and emerging evidence that may be eligible for inclusion in systematic reviews.

In education, it is rare to find important syntheses that are routinely updated. For example, What Works Best in Education for Development: A Super Synthesis of the Evidence, produced by Australia’s Department of Foreign Affairs and Trade, draws together research up to 2016 but has not been updated since.43 3ie’s systematic review of Interventions for improving learning outcomes and access to education in low- and middle-income countries has not been updated since 2015.44 Notable exceptions include the EEF, which ensures “repeated systematic searches … for systematic reviews with quantitative data” in its toolkit,45 and the Harvard Center on the Developing Child, which routinely updates material on its core topics.

c. The health sector has developed sophisticated synthesis methodologies and is increasingly incorporating broader types of evidence.

Synthesis is a complex undertaking, fraught with methodological issues and potential bias. A systematic review “should follow standardised processes to ensure that all practically available relevant evidence is identified, considered, rigorously assessed, and thoughtfully synthesised”.46 The health sector has championed more rigorous approaches to the process of synthesis:

▶ is a “transparent framework for developing and presenting summaries of evidence and provides a systematic approach for making clinical practice recommendations”.47 Used by more than 100 organisations worldwide, GRADE allows the quality of evidence and the confidence in recommendations to be assessed on a consistent scale. The padlock symbol used in the EEF’s Teaching and Learning Toolkit is an example of a confidence scale being used by one organisation working in education.33

▶ is a quality assurance tool for systematic reviews designed to promote transparent reporting. It includes a 27-item checklist addressing the introduction, methods, results, and discussion sections of a systematic review report.48

Given the greater volume of health sector research, systematic reviews in health inevitably find more eligible studies. However, in other fields such as education, where research can be sparse, alternative methodologies such as “subject-wide evidence synthesis” are gaining interest.49,50 Approaches that embrace a wider range of methodologies and sources are likely to have growing relevance. For example, - addresses the need to assess confidence in qualitative research.51

“People need to make a decision even if we don’t have an RCT. We need to help them make the best decisions based on all available information.” — Alexandra Resch, Director of Learning and Strategy, Human Services Research, Mathematica

“It is critical for investments in research to illuminate what works, what does not work, what may be harmful, and the core ingredients of effective approaches and interventions.” — David Osher, Vice President and Institute Fellow, AIR

One type of evidence that is underrepresented in both health and education synthesis is research about what does not work. To avoid repeating history’s mistakes and to learn from experience, more transparency is required in all sectors.

3. Guidance

With comprehensive and timely synthesis available, it becomes possible to turn synthesis conclusions into evidence-informed guidance, written in ways that are accessible and relevant to policymakers and practitioners. In comparing health and education guidance, two important differences stand out:

a. The health sector has infrastructure and well-established processes to create and disseminate guidance.

Health syntheses routinely include “implications for practice” in their conclusions. The health sector systematically embeds the conclusions of synthesis into treatment recommendations (known as critical pathways), prescribing guidance, purchasing decisions and regulations.52 This can be found at all levels. For example:

▶ the World Health Organization issues “global guidelines ensuring the appropriate use of evidence in health policies and clinical interventions”53;

▶ national policy institutes, such as the UK’s National Institute for Health and Care Excellence, make “evidence-based recommendations developed by independent committees, including professionals and lay members, and consulted on by stakeholders”, which guide the work of health practitioners across a country.54

Many professional bodies at national and international levels focus on specific health conditions or themes and provide specialist expertise in the review of emerging evidence, scientific leadership and workforce development.55,56

“Evidence is necessary but not sufficient – we are not equipped (in education) to support the practice shifts and uptake.” — David Osher, Vice President and Institute Fellow, AIR

With less research to draw upon and without a systematic synthesis process to shape conclusions from the research that does exist, educational policy development is often a far less predictable and rigorous process.

Education systems (that is, everything that goes into educating school students, including laws, policies, funding and regulations) are more likely to oscillate between stagnation and dramatic changes in direction as they fall victim to political interventions rather than continuous improvement. According to the , looking at some of the best-resourced countries in the world, “many countries lack effective mechanisms to strategically integrate data and educational research into the process of evidence-based resource planning”, and “systematic weaknesses in the ability to use data and research evidence can appear at every level of governance”.57

Yet, building blocks are emerging. The co-hosted by the UK Foreign, Commonwealth & Development Office and the World Bank is composed of economists, educationalists, psychologists, and policymakers, and has released its first recommendations on “smart buys” in education for low- and middle-income countries.58,59

b. Health sector guidance seeks to strengthen the current system and practice.

In health, new evidence is constantly used to improve the current system. The sector evolves over time through tweaks to strengthen current practice rather than by adding entirely new or parallel systems. If a doctor wants to test a new idea or potential treatment, then there are clearly defined mechanisms to ensure transparency, patient safety, ethical approval, trial delivery, and results publication, all within the mainstream system.

In education, teachers usually lack any similar support for innovation. While they sometimes enjoy greater autonomy in the classroom, the likelihood that innovation by any single teacher will improve the overall system is very low. Education organisations such as ISKME, a non-profit whose mission is to improve the practice of continuous learning, collaboration and change in the education sector, are doing important work to help schools collect and share information.60

Meanwhile, the search for silver bullets in education sometimes causes pilots to be established alongside, or parallel to, the formal education system. An increasing body of scaling experience argues that these “parallel pilots”, which do not engage with the reality of the mainstream system, are likely to drain resources and produce unsustainable results.61

4. Implementation support

With evidence-informed guidance in place, it becomes possible to focus on the challenges of implementing changes within a system. In comparing the capacity of the health and education sectors to do this, two important differences stand out:

a. The health sector invests funds in technical assistance and capacity building to implement research findings at the country level.

Research in the health sector has established that “passive approaches for disseminating (evidence) are largely ineffective because dissemination does not happen spontaneously” and “too often, capacity-building efforts have been built around pushing out research-based evidence without accounting for the pull of practitioners, policy makers, or community members or accounting for key contextual variables (e.g., resources, needs, culture, capacity)”.62

The health sector creates this demand in a number of ways: for example, through the pre-service training of practitioners, through standards for professional accreditation and through the obligations of continuous professional development.

One example is a £16 million programme funded by the UK Department for International Development (DFID, now part of the FCDO) to build capacity for health evidence use across 12 low- and middle-income countries in Africa and Asia. It concluded that “individual capacity (in terms of knowledge, skills, confidence and commitment) is the bedrock of effective evidence use, but programmes also need to harness organisational processes, management support and wider incentives for people to change ways of working”.63

In the education sector, the building blocks are once again evident:

▶ UNESCO’s International Institute for Educational Planning (IIEP) holds the UN mandate to support educational policy, planning and management. IIEP is “committed to creating and sharing knowledge to support context-relevant analyses to improve educational policy formulation and planning. Training, technical cooperation, applied research and knowledge sharing are the four main activities through which IIEP accomplishes its mission”.64

▶ UNICEF’s Data Must Speak (DMS) initiative helps countries “unlock existing data to expand access to education and improve learning for all. DMS provides direct technical assistance in Chad, Madagascar, Namibia, Nepal, Niger, the Philippines, Togo and Zambia”.65

▶ In a strong example of private philanthropy and government stakeholders collaborating to improve policy delivery, UBS Optimus Foundation funded a systems-level analysis of education service delivery in Sierra Leone, with an emphasis on identifying the disconnects between policy design and implementation.66 The report supported the development of Sierra Leone’s Education Sector Analysis preceding the new Education Sector Plan (2020-2025), a proposed restructure of the Ministry of Basic and Senior Secondary Education (MBSSE), and the establishment of a delivery unit.

b. The health sector invests in learning networks so that implementers can systematically learn about how to implement evidence in practice.

For example, one health initiative describes itself as “an innovative, country-driven network of practitioners and policymakers from around the globe who co-develop global knowledge products that help bridge the gap between theory and practice to extend health coverage to more than 3 billion people”, and it spans 34 countries.67

Such networks are not unique to health, although it is perhaps difficult to point to education activities at the same scale (yet). Strong building blocks in education include:

▶ the Millions Learning Real-time Scaling Labs, initiated by Brookings, which aim to “strengthen scaling efforts through a forum for peer-to-peer learning in which lab participants discuss lessons learned and develop strategies to address challenges faced during their education interventions’ scaling journey”68;

▶ the four Regional Networks (AfECN, ARNEC, ANECD and ISSA), which are membership associations that act as regional learning communities, bridging the policy and practice domains to “challenge existing knowledge and practice, and co-construct new approaches and models”69;

▶ the Saving Brains Learning Platform, which is a learning community of more than 100 NGOs using evidence-based approaches to scale early childhood development, supported by a number of private foundations who understand the value of peer-based learning and networks to support change and scaling.70

In both the health and education sectors, implementation research is a growing area of study which is fundamental to understanding evidence use.

Implementation research seeks to better understand the real-life challenges of “implementation—the act of carrying an intention into effect”. Embracing real world challenges and complex contextual issues, it is an approach that focuses on the users of research rather than the producers. Methodologically, it can utilise a wide variety of qualitative, quantitative, and mixed methods techniques that seek to better understand issues including acceptability, adoption, appropriateness, feasibility, fidelity, implementation cost, coverage, and sustainability.71,72

5. Enabling environment

In reflecting on the health knowledge bridge, it becomes clear that its strengths are explained by something greater than just institutions and processes. The health sector has created an enabling environment – a culture of evidence use – that pervades its work. In comparing the health and education environments, three important differences stand out:

a. The health sector strives for independence and transparency in research and synthesis.

In sectors with high levels of commercial and other stakeholder self-interest, a key aspect of building trust in evidence relates to the independence and transparency of research and syntheses. Where independence is potentially compromised (as when a pharmaceutical company sponsors research into its own product), transparency becomes even more important. For these reasons, and with no lack of recent controversy to spur greater efforts, the health sector has created high expectations for managing conflicts of interest in research and for synthesis processes that seek to be open and transparent at every stage.73

Central to the health synthesis process is the engagement of large numbers of health professionals from numerous organisations acting in a volunteer or supporter role. This helps with transparency, creates higher levels of buy-in, and distributes costs across and around the sector, so that central financing is not an overly influential factor in determining what gets synthesised and when.

In education too, it is possible to see certain stakeholders exhibiting a significant influence on what gets researched and when. For example, research is sometimes commissioned by donors around specific agendas, which are linked to individual interventions also funded by the same donors. Synthesis, when it happens, is often commissioned as a one-off study from a university department, think tank, or private research institution, with little transparency. The education sector has not yet matured the same level of expectations in regard to independence and transparency that are evident in health. Both sectors, no doubt, have further to travel.

“Donors often influence the evaluations of the interventions they have funded and may promote costly proprietary tools or one organisational view.” — Manos Antoninis, Director, UNESCO GEM Report

b. The health sector works to align incentives and culture between evidence creators (researchers) and evidence consumers (health practitioners).

Doctors are immersed in a culture of research from very early stages in their medical training. Many hospitals have specialist facilities for conducting research and trials, and contributing to clinical trials is understood to be a shared responsibility and opportunity for all who work in the sector. Ethics committees and other research infrastructure routinely exist to support this.

“For public health practitioners to apply the latest scientific evidence, they need to be connected all along the research production-to-application pipeline and not just to the end of it”.62

The division between hospitals and universities is much less stark than between schools and universities. Teaching hospitals and the work of academic practitioners mean that professionals routinely cross between these worlds, sometimes several times in a day. Various incentives also encourage engagement in research. For example, hospitals can earn additional income by hosting and facilitating clinical trials for pharmaceutical companies, so that many have specialist research and development departments making this an everyday and mutually beneficial part of their work.74

Schools and teachers, on the other hand, have fewer opportunities to learn about evidence in their training, conduct research less frequently, and usually lack the facilities and/or resources to do so. Among other issues, this means that mountains of data held by schools and teachers (e.g., relating to student assessment) remain siloed in schools and are rarely analysed to help improve practice and performance in the wider system.

c. The health sector has dedicated translation specialists.

Allied to the health sector are professions that focus on the translation of science into practice. For example, medical writers specialise in writing regulatory and research-related documents, disease or drug-related educational and promotional literature, publication articles such as journal manuscripts and abstracts, and content for healthcare websites, health-related magazines and news articles. To do so, they need an understanding of key medical concepts and the ability to communicate scientific information to suit the different levels of understanding of specialist and lay audiences.75

In the education sector, it is common to find journalists who write about education, but an education equivalent of the medical writer is difficult to find. Without such a role, too much valuable evidence remains hidden.

Individually, these differences between the health and education sectors are important but not necessarily dramatic. Yet, by ensuring capacity and good practice at every stage of the process (from knowledge generation through to implementation support, facilitated by an enabling culture), the health sector ensures that the knowing-doing gap is bridged.

The health sector knowledge bridge has taken decades to develop, and it continues to evolve. An equivalent Education Knowledge Bridge will inevitably look and function differently, but this analysis shows that many building blocks already exist. Urgent action and greater persistence are required to strengthen and connect these building blocks into a functioning Education Knowledge Bridge.

“It is surely a great criticism of our profession that we have not organised a critical summary, by specialty or subspecialty, adapted periodically, of all relevant randomized controlled trials.”76 — Archie Cochrane

These words were written not today, but in 1979. The profession being critiqued was not education, but health. It is a reminder that, in the last 40 years, the health sector has taken huge steps to bridge the knowing-doing gap.

Archie Cochrane’s quote was the inspiration that led the medical profession to build a database of systematic reviews of research. The impact on policy and practice has been transformative. Doctors and policymakers no longer need to read countless individual studies and reports, but can instead refer to guidance based on authoritative, independent synthesis of multiple studies, which gives clear advice about the effects of clinical options on prevention, treatment and rehabilitation. The result is that the prescription of drugs or surgical interventions no longer needs to be a lottery dependent on the personal experience of an individual doctor.

Yet, the quote reminds us that it was not always so. The Cochrane Collaboration was established as a public good only in 1993, 14 years after Archie Cochrane’s call, supported by a grant from the Department of Health in England. The initiative took many more years to develop, starting with fewer than a hundred reviews in 1995 and scaling to more than 8,600 today. The global collaboration now has over 100,000 supporters and members in more than 130 countries who tackle the constant challenge of producing, analysing and synthesising new research, then translating it into health evidence and high-quality trusted information that can be used by policymakers, practitioners, researchers and consumers, patients and the public to inform health decision-making.77 This is a community-driven effort: Cochrane produced 560 new or updated reviews in 2019 with a central budget of only £9.1m (US$12.5m).25

These reviews help to shape sector policy.78 For example, it is estimated that 90% of 2016 WHO guidelines contain Cochrane evidence.79 Trust in the process is critical and not without some controversy. Cochrane itself “does not accept commercial sponsorship or conflicted funding” and implements a conflict of interest policy that has been described as “stricter than most journals”.73,80 In addition, there is continual methodological innovation in the process of synthesis itself.48,50

The Cochrane logo is a powerful reminder that evidence transforms lives only when it is used. It shows the results of seven randomised controlled trials (RCTs) of an inexpensive drug given to women about to give birth too early. Had the studies been systematically reviewed, it would have been clear by about 1982 that a simple steroid injection matures the baby’s lungs, improving the baby’s chances of survival outside the womb and reducing the odds of death by 30–50%. As no systematic review was published until 1990, most doctors had not realised that the treatment was so effective, and many premature babies probably suffered or died unnecessarily.

Cochrane goes further than producing syntheses for medical specialists. It (and many others in the sector) seeks to communicate its work in a truly accessible way, recognising the interests of policymakers, journalists and patients, as well as the need to counter out-of-date or inaccurate information. For example, since 2014, Cochrane and Wikipedia have partnered to help medical editors transform the quality and content of health evidence available online, using new and updated Cochrane Systematic Reviews.81

3. Mind the gap: The challenge for education

Inspired by the idea of a knowledge bridge and the examples from health, Education.org explored opportunities and challenges by studying leading initiatives and actors from the most influential knowledge organisations in the global education sector.

Analysis and interviews consistently confirm the absence of a functioning knowledge bridge in education.

“There is a tremendous amount of data and research that is not accessible and heavily under-utilised. We would all benefit from efforts to routinely surface this information and to make it more accessible and useful for leaders influencing education decisions.” — Andreas Schleicher, Director for the Directorate of Education and Skills, OECD

“The sector is driven mostly by supply, not demand. Many researchers are not thinking about how their research will be used, and there is little accountability for that.” — Leader of a global knowledge organisation

“After two years of rigorous work for a systematic review, we discovered by accident a similar effort underway by a fellow actor in the field.” — Birte Snilstveit, Director Synthesis & Reviews and Head of London Office, International Initiative for Impact Evaluation (3ie)

“We often say that we know what works, but we don’t know. We know what might.” — David Osher, Vice President and Institute Fellow, AIR

“No program evaluation my team has completed has been applied to a next project.” — Lisa Petrides, Founder and Chief Executive Officer, ISKME

“There is a massive amount of (country level) data not being used, mainly due to a lack of time and resources.” — LeAnna Marr, Acting Deputy Assistant Administrator, USAID

“Unrestricted funding is increasingly limited in the development sector. So, the questions that are being addressed are often driven by donor interests, rather than governments in low- and middle-income countries.” — Senior leader in a global research organisation

“Major agencies and donors dominate the agenda; the voice of countries is clearly not represented adequately.” — Manos Antoninis, Director, UNESCO GEM Report

“Too often, we extract information from non-profits and communities for evaluative purposes, only to keep the learning to ourselves, or perhaps share it with a few like-minded peers… When we choose not to share what we are learning from evaluation, we not only impede the efficiency and effectiveness of the sector, but also fall short of our responsibility to the communities we serve.” — Funder and Evaluator Affinity Network (FEAN)82

“The extra step of synthesis is needed for the institutionalisation of evidence.” — Howard White, Chief Executive Officer, Campbell Collaboration

Why is this? These quotes and our wider interviews point towards three important communities in the education sector, enabled by supportive donors, who lack the infrastructure and processes to collaborate effectively:

- The academics, researchers and thinkers who help the education sector to understand what is happening and why.

- The policymakers and system managers who determine direction, set policy and allocate resources.

- The practitioners and doers – the teachers, headteachers, assistants, community workers, parents, civil society organisations, NGOs, and others – who make education and learning happen in schools, communities and homes.

Given that all three communities are full of professionals who are talented and passionate about their work, why does evidence not flow more effectively between them? Specifically:

▶ How do these three communities communicate? For example, how do they share evidence, agree priorities and ask questions of each other?

▶ What are the incentives and cultures that encourage or inhibit collaboration?

Image 2. An Education Knowledge Bridge

There are certainly worthy exceptions to this broader picture, but generally these three communities are divided by different cultures and incentives.

Each works in isolation, but when these communities need to collaborate, they lack the infrastructure, incentives and culture to bridge the gap.

For example, when researchers communicate evidence through journal articles, policymakers struggle to absorb the volume of diverse and sometimes conflicting information and to translate the evidence into policy. Practitioners find it challenging to see its relevance to their classroom context.

What does this mean? How could the sector change?

Our interviews with sector leaders suggest what might help.

“Research papers often start with reviewing the current state of evidence. Of course, context and needs are critical too. There is scope for much more co-creation at the design stage that is rooted in context and can be guided by evidence.” — John Floretta, Global Deputy Executive Director, J-PAL

“Much synthesis work today is ad-hoc and uncoordinated. The good news is that there is increasing demand for synthesised evidence. But it needs brokering and coordination, to be effective … We need coordinated curation and translation of research, not just another archive.” — Birte Snilstveit, Director Synthesis & Reviews and Head of London Office, 3ie

“We need to be able to better access and use the data we have and reduce the duplication of research efforts.” — Luis Benveniste, Human Development Regional Director for Latin America and the Caribbean, World Bank

“It is rare to put country needs first when donor-commissioned studies are being formed, despite good intentions. Even rarer are efforts to identify and acknowledge national and local research and experiences. It would be most welcome if our sector were to re-prosecute what we mean by ‘evidence,’ and to strengthen our mechanisms for routinely listening to and elevating the voices of communities.” — Sara Ruto, Chief Executive Officer, PAL Network and Chairperson, Kenya Institute of Curriculum Development

“We need to do more to get reviews commissioned with a clear intention to inform decision making. Doing that means producing reviews which shift beyond the general first-generation question of if an intervention works or not.” — Howard White, Chief Executive Officer, Campbell Collaboration

“We need to broaden our view of stakeholders to include both power-holders – those who make decisions about policies and programs – and those who are likely to be affected by the decisions.” — Ruth Levine, Chair, Board of Commissioners, 3ie

“Researchers have insufficient incentives to do research on the most important education challenges facing the world’s poorest people. Funders could be highly successful in generating a different process.” — Manos Antoninis, Director, UNESCO GEM Report

“A big difference with medicine is that this evidence is part of your identity. Literature has been embedded in you since starting practice.” — Gina Lagomarsino, President and Chief Executive Officer, R4D

“While we have been effective in making the case for curriculum reform and formative assessment, the approved new curriculum lacks targeted focus on enhancing equity and implementation is not smooth. There is very little feedback or learning during the implementation process.” — Emmanuel Manyasa, Executive Director, Usawa Agenda

On discovering the extent of the knowing-doing gap, it might be tempting to assume that we need much more research to fill it. Indeed, it is rare to find an academic paper that does not say as much.

But this investigation suggests that it is not the lack of research that is the greatest obstacle to progress, but the failure to use what we already have.

“If more time were spent figuring out how to apply what we’ve already learned, and less time on conducting nuanced research around the edges, many millions of children around the world could benefit and no harm would be done.” — Jack Shonkoff, Director of the Center on the Developing Child, Harvard University

We need an Education Knowledge Bridge to span the knowing-doing gap.

4. Building a bridge to span the knowing-doing gap

Our investigation suggests that a new Education Knowledge Bridge requires five capabilities:

1. Research generation: promoting use and user orientation from the outset

The core argument of this white paper is to make better use of the research that we have. But research generation also remains a challenge, especially in the education sector, where quantity, quality and relevance all need to be strengthened if an Education Knowledge Bridge is to have the inputs needed to support change. Indeed, the availability of comprehensive synthesis would enable researchers to focus on filling genuine evidence gaps, and stronger involvement of policymakers and practitioners in the early stages of research would increase the likelihood that scarce resources are used to tackle the most important challenges.

2. Synthesis: building a big picture with the jigsaw pieces

Extending the focus of policymakers and practitioners from individual studies to comprehensive syntheses is essential. Shifting the frequency of syntheses from one-off to routinely updated is equally important.

The education sector needs a Cochrane-like capacity so that future generations will find it hard to believe that we ever lived without the capacity for comprehensive, up-to-date, contextually sensitive synthesis of research in education.

“We all have this as our interest, but none of us has it as our mandate. This initiative would be a rising tide that could lift all boats.” — Rebecca Winthrop, Co-director, Center for Universal Education and Senior Fellow, Global Economy and Development, Brookings

3. Guidance: answering the “so what?” of every synthesis

Synthesis is essential but not sufficient. The third part of the bridge is the capacity to develop evidence-informed guidance and recommendations derived from the synthesis. The process for developing guidance needs to be as robust as that for developing synthesis. The guidance and recommendations must be relevant and clear for the target audiences, concrete and realistic for the context in which they are targeted, and aware that cost is a policy reality that must also be considered.

4. Implementation: engaging for and supporting change at scale

Turning guidance into policy and practice requires improvement in the capacity to implement change. It needs coordinated support from global players and local actors, bottom-up as much as top-down. Implementation plans must engage stakeholders with clear messages and effective communication. They must be designed with opportunities for rapid learning and adjustment in mind, firmly aware that every new context has huge implications for even the most established model. It is essential to resist the trend of implementing interventions without a critical assessment of their appropriateness and adaptation for the specific context.

“A perfectly designed policy does not exist. For instance, a policymaker may use top-notch economic knowledge or international best practices to bring about a new incentive mechanism that improves teacher effectiveness. However, critical adjustments that take account of country-specific institutional and managerial contexts can only be made during implementation. That is why a good design must be paired with a mechanism to assess if implementation is following the right course, identify and measure impact, and improve the policy over time. That capacity to learn and adapt comes with time, but it is absolutely critical.” — Dr Elyas Abdi Jillaow, OGW, Director General for Basic Education, Ministry of Education, Kenya

5. Enabling environment: building a culture of evidence use

Interviews and analysis suggest that adopting new tools and processes is not enough. How we do it matters too, especially in education, where the culture and approach are distinctive. The interviews identified five principles to be embedded in the design of the bridge, essential to creating the enabling environment:

a. Being user-centred – rather than focusing on theory

A critique made of research in our interviews is that, despite best intentions, it is often driven by the interests of academics and the agendas of donors, rather than the needs of practitioners and policymakers. Being user-centred would involve bringing those who will utilise evidence in their decision-making into the design process from the start, prioritising issues of most relevance to their current problems of practice and policy, making sure that recommendations are context-specific, and using a communication style that is simple and actionable. Engaging a wider range of voices and recognising the importance of elevating data and experiences from varying contexts are also important elements of prioritising the needs of users over theoretical considerations alone.

“We need to balance incentives between creation and use of knowledge.” — Director of a national education research organisation

“The current model of education knowledge is centred on evidence generation, not use. Users should not be passive recipients but active co-producers of knowledge, as we have learned from health. We need much, much more listening, and we need to adopt tools for synthesis and for transfer of evidence.” — Gina Lagomarsino, President and Chief Executive Officer, R4D

b. Reinforcing the core education system – instead of establishing parallel tracks

An observation regularly made, especially by policymakers, is that too many innovations seek to bypass or sit parallel to the mainstream education system and planning processes. This limits their scalability and sustainability. It can mean that resources are diverted. Several knowledge actors interviewed for this paper further stressed that the trend towards research on silver bullets, or “what works,” is misleading and creates false narratives. Instead, it must be a principle to use evidence to build permanent infrastructure and continuous improvement of the mainstream education system rather than adding ad-hoc, one-off efforts.

“We often create interventions that sit on top of (not part of) what is already happening. It’s impractical, costly and therefore fails often.” — Leader in a national research-based advocacy organisation

“We don’t motivate continuous improvement. When new research is generated, it should be directly embedded within an existing policy or practice.” — Alexandra Resch, Director of Learning and Strategy, Human Services Research, Mathematica

c. Safeguarding independence – instead of being driven by funding biases

To make a difference, synthesis and recommendations must be trustworthy. Credibility derives from a process that is independent, transparent, and non-partisan at every stage. If synthesis processes are tied to proving the effectiveness of specific interventions, projects, or themes, especially through the enthusiasm of a donor utilising restricted grants, then credibility and trust in the entire process is placed at risk.

d. Leveraging networks – instead of reinforcing silos

Ultimately, change depends on many actors coming together. Much of the synthesis process in health is led by Cochrane, made feasible by 82,000 volunteer members who are typically medical professionals drawn from numerous organisations and networks. Their engagement in the Cochrane synthesis process is mutually beneficial and gives further amplification and credibility to the results. The same principle is true when delivering change programmes. Success is supported and reinforced when networks of collaborators span academia, policy, practice, business, philanthropy, social entrepreneurs, unions and civil society. As we are reminded by Sustainable Development Goal 17 (“Revitalize the global partnership for sustainable development”), we need stronger, broader and more interconnected networks and new alliances to make this bridge possible.

e. Prioritising equity – avoiding averages

The greatest risk of failure in Our World’s school report relates to marginalised children such as girls, minorities, those in poverty, and children with learning differences and special needs. We have an even greater responsibility to use evidence to support children at greatest risk. This starts with the way in which we frame research questions, collect data and synthesise evidence. Only by collecting data that is representative of the diversity in our communities, that avoids unintentional biases in its design and methodologies, that disaggregates results recognising that “no one is average,” and that constantly places an emphasis on understanding what is working for whom and in what context, will it be possible for school systems to improve outcomes for those who need quality education the most.

“There is increasing evidence that in order to increase research use, we need to do research differently: by involving the beneficiaries of the research from the onset, through co-creation and co-implementation design.” — Mathieu Brossard, Chief of Education, UNICEF Office of Research – Innocenti

5. An open invitation to engage in bridge building

During the research leading to this white paper, COVID-19 swept across the globe. The health sector responded quickly on multiple fronts: testing, collecting data, using existing R&D protocols to develop vaccines, refining treatment guidance, and rapidly increasing system capacity. While there have been numerous failures and policy mistakes, treatment protocols and survival rates improved globally within only a few months, as the health sector used its well-established knowledge bridge to learn and adapt while simultaneously treating patients.

In contrast, education systems experienced the largest closure of schools in history, an absence of data on educational impact for children in the context of growing inequity, ad-hoc distance learning provided to a minority with little evaluation of quality, and policy confusion over good practice for reopening with serious implications for the most marginalised. During a crisis, the stark differences between health and education have become even more evident.

As Ian Chambers of Cochrane observed when reflecting on the progress made in health over four decades: “The most important thing to keep in mind is that it is no longer acceptable to try and guess what the evidence says about particular forms of care.”

How long before we can say this about education too?

If we are to improve our world's education performance, we should seize the opportunity to connect and build on existing examples and current initiatives to create the kind of knowledge bridge that has transformed the health sector over the past forty years.

This is not a quick fix, but a systemic change in incentives, investments, infrastructure, culture and capacity to create an Education Knowledge Bridge to span the knowing-doing gap.

Image 3. Open invitation to bridge building

An effective Education Knowledge Bridge will:

- Enable better utilisation of the evidence and resources that have already been paid for, but sit largely unused.

- Make smarter use of scarce funding, particularly by identifying the greatest needs and most significant gaps, so as to avoid duplication.

- Encourage a democratisation of the culture around evidence and front-line voices, which are often missing in decision-making today.

- Contribute towards the goal of creating stronger, more equitable education systems, better supporting learning outcomes at scale, especially for marginalised groups (girls, minorities, those in poverty, children with learning differences or special needs).

- Allow us to move faster and respond better, especially in times of crisis.

How can we collaborate to build the Education Knowledge Bridge faster? We call upon:

- national and local education leaders to invest resources in the capacity, culture and cultivation of political will that is required to use the growing volumes of evidence in decision-making. Developing effective national research centres that are tightly linked to education planning cycles and long-term national policy agendas is essential if evidence use is to become routine and systematic. National commitments in this area will enable governments to make stronger demands on the global community for context-sensitive investments in research and processes that enhance the accessibility and use of evidence for decision-making.

- existing institutional knowledge actors in the education sector to take action to increase the accessibility and use of knowledge products for policy and implementation. This collective action will lift everyone’s work. The building blocks of the Education Knowledge Bridge already exist, but only through collaboration can we connect the pieces and work effectively as an education sector.

- donors and research commissioners, including private foundations, multilaterals, bilaterals and businesses:

  - to address the significant mismatch between the research that is funded and the research that is used, by advancing incentives across the sector to focus more on evidence use. In particular, to address questions of utility from the outset by modifying the processes for commissioning new research in response to the calls and ideas captured in this white paper; and

  - to contribute expertise and facilitate connections with their networks to enhance capacity for evidence use. These ideas have enormous potential for boosting impact, but they depend upon insightful donors willing to take risks and stimulate more innovation.

- individual academics and researchers to start every new research study not just with a literature review, but with an investigation of the challenges and issues faced by policymakers and practitioners. Routinely engaging policymakers and practitioners as partners is much more likely to lead to research that is relevant, valuable and used.

- teacher organisations and teacher training colleges to help teachers strengthen their evidence literacy, that is, becoming skilled in the practical application of the findings of the best available current research in everyday practice. This is a change that will not only boost outcomes for students, but will help to lift professional standing for the teaching community.

- community voices and NGOs to advocate for high-quality, evidence-informed decision-making that reflects local needs, especially those of the most marginalised groups. It is essential that community voices and NGOs speak out to ensure that invisible groups and ignored data are better reflected in decision-making processes and the commissioning of research.

- teachers and school leaders to make frontline experience visible and contribute insights from every classroom to help set the research agenda and break down the academic-practitioner divide.

- media to use its skills in translating complex ideas to help ensure that the most important information reaches those who need it the most in forms that are easily understood.

We call upon everyone – parents, families, learners, and citizens – to support and participate in the revolution to make education a science-based sector, so that all children have access to a good quality education.

6. Education.org’s contribution

Education.org is an independent non-profit foundation working to advance evidence and improve education for every learner. We are committed to a world where the best evidence guides education leaders. Our mission is to build resources for education leaders worldwide by synthesising and translating an inclusive range of evidence. We build bridges between knowledge actors, policymakers and practitioners in support of those who make education happen, from pre-primary through to secondary school. We differ from existing initiatives in that we focus on the use, rather than the creation, of evidence.

We are a young foundation with a bold vision, eager to make a unique and positive contribution. By calling out the challenges and ideas in this white paper, we seek to build on existing initiatives and broker new relationships to accelerate change in the way that education systems use knowledge to boost quality and equity for all. Our voice is not one of an external critic, but of an active partner – a benevolent disruptor and eager bridge-builder working across education systems.

Education.org is supported by an early-stage visionary co-investor collective and partner network. In collaboration with governments, agencies, NGOs, universities, think tanks, media, businesses, and foundations, we are working towards three overarching goals:

- Create accessible and actionable knowledge to support decision-makers.

- Heighten the relevance of evidence by including the range of sources and voices that are typically left out.

- Improve the capacity, infrastructure, and culture for evidence-informed policy and practice at global and country levels.

We are inspired by the strength of the health sector knowledge bridge and motivated to accelerate a similar revolution in education.

Education.org’s Contribution to the Education Knowledge Bridge.

While the knowledge bridge in health has taken nearly forty years to develop, we see ways to leapfrog steps in this process. By learning from proven models in health and building on what we already have in education, we have the potential to create a fully functioning Education Knowledge Bridge in the next ten years.

This ten-year mission starts now – with our first three contributions to this sector journey:

- This white paper: An open invitation to engage in bridge building.

- Education.org: An open-access and inclusive synthesis resource for the sector.

- Culture and capacity strengthening: With the support of the Education.org Global Council, improving the sector’s incentives and infrastructure to use evidence for problem-solving.

1. This white paper: an open invitation to engage in bridge building

A tremendous amount of evidence that could increase education access and improve outcomes goes unused. In this white paper, we set out the challenges of bridging the knowing-doing gap and invite collective, coordinated improvements across education system actors and influencers. We are ready to support, to connect and to help align activities, but sustainable change can only be achieved through the concerted actions of individuals and organisations moving towards this shared goal through their own ongoing work and mission.

2. Education.org: an open-access and inclusive synthesis resource for the sector

To support evidence-informed decision-making and implementation in education, we are launching Education.org, a global public resource of synthesis for the education sector.

For selected education topics, these syntheses will incorporate evidence that includes, but goes beyond, classic journal-based research, drawing also on unpublished reports, programme evaluations, agency briefs, policy papers, conference reports, and materials in multiple languages. To do this, we need a quantum leap in the way we think about and robustly use evidence in education. We will therefore convene an international working group composed of leading education actors and innovative voices that are not typically represented within the education knowledge space. The group will construct a framework to categorise a wide range of published and unpublished sources (highly cognisant of both quality and inclusivity issues), together with an approach to guide this nascent movement to dramatically broaden the range of participants and evidence involved in education dialogues, so that evidence becomes more relevant and timely.

In time, with an expanding network of Education.org supporters, volunteers, and partners, we aim to dramatically increase the number of quality and actionable education syntheses, and the frequency with which they are updated.

To get us started, we propose that the initial synthesis investigations will focus on three critically important framing questions raised by sector leaders:

- How can we ensure that the best available evidence around accelerated and catch-up learning guides the development of post-pandemic learning recovery policies and practices?

- For the six out of ten children in school but not effectively learning, what can we learn from the most relevant advances in neuroscience, human development and learning sciences to reverse this trend and accelerate progress towards SDG 4?

- For the 260 million out-of-school children, and those now further displaced due to COVID-19, what are the most relevant global lessons for improving access and learning outcomes?

In conducting this work, we will put policy and practice priorities and challenges first, focusing on the needs and questions of the country-level decision-makers who are accountable for progress in the sector. See Appendix D for our initial hypotheses on these framing questions.

3. Culture and capacity strengthening: With the support of the Education.org Global Council, improving the sector’s incentives and infrastructure to use evidence for problem-solving

In our drive to ensure that knowledge is used, syntheses are essential but not sufficient. The education sector lacks a dedicated problem-solving group that draws on the best available evidence and experience to accelerate progress on the most pressing education challenges globally. We therefore propose the formation of the Education.org Global Council: an assembly of deeply experienced global and national actors with a concrete track record of bridge building to address the knowing-doing gap, and with a broad range of expertise not often surfaced in traditional forums. Members will include former education ministers, government learning experts, and individuals from other industries and fields such as implementation and improvement science, communications, and media. We will host and facilitate this council of seasoned problem-solvers.

To strengthen the capacity and culture around evidence-informed decision-making in education, we will also, in partnership with government and civil society leaders, invest in training, coaching, and facilitation. We will nurture a pipeline of talent through competitive grants and fellowships complemented by mentoring, specifically targeting areas of greatest need in the accessibility and use of evidence in national policy processes and implementation.

Join us!